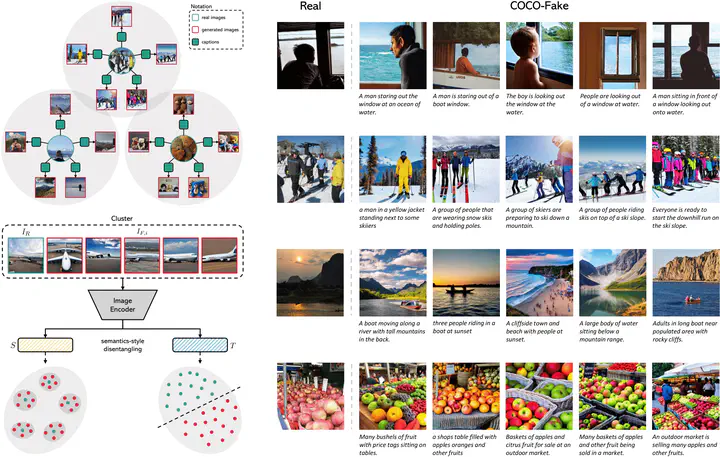

Overview of our multimodal approach to disentangling semantics and style for deepfake detection, in which five subsets of the semantics contained in a given image are used to generate as many fake images.

Abstract

Recent advancements in diffusion models have enabled the generation of realistic deepfakes from textual prompts written in natural language. While these models offer numerous benefits across various sectors, they have also raised concerns about the potential misuse of fake images and put new pressure on fake image detection. In this work, we pioneer a systematic study of the authenticity of fake images generated by state-of-the-art diffusion models. First, we conduct a comprehensive study of the performance of contrastive and classification-based visual features. Our analysis demonstrates that fake images share common low-level cues, which render them easily recognizable. Further, we devise a multimodal setting wherein fake images are synthesized from different textual captions, which are used as seeds for a generator. Under this setting, we quantify the performance of fake detection strategies and introduce a contrastive-based disentangling strategy that lets us analyze the role of the semantics of textual descriptions and of low-level perceptual cues. Finally, we release a new dataset, called COCO-Fake, containing about 600k images generated from original COCO images.